Snowpark on Snowflake vs. PySpark on Databricks: The No-Nonsense Showdown

Puneet, Data Plumbers

Alright, let’s cut through the noise.

You’re here because you’re wrestling with a choice: Snowpark on Snowflake or PySpark on Databricks.

I’ve seen this debate play out in real architectures—Databricks crunching data, Snowflake acting as the final sink.

Why split the work that way?

What’s the real difference?

I’m diving in, first-person, high-energy, no fluff. Buckle up.

Why This Matters to You

You’re an ambitious operator or a seasoned pro.

You don’t have time for tech buzzword bingo.

You need tools that deliver—fast, scalable, cost-effective.

I’ve watched teams burn cash and hours on the wrong stack because they didn’t ask the hard questions upfront. Snowpark and PySpark aren’t just frameworks; they’re bets on your future. Get it wrong, and you’re stuck with a clunky pipeline or a budget black hole. Get it right, and you’re ahead of the curve, shipping value while competitors scramble.

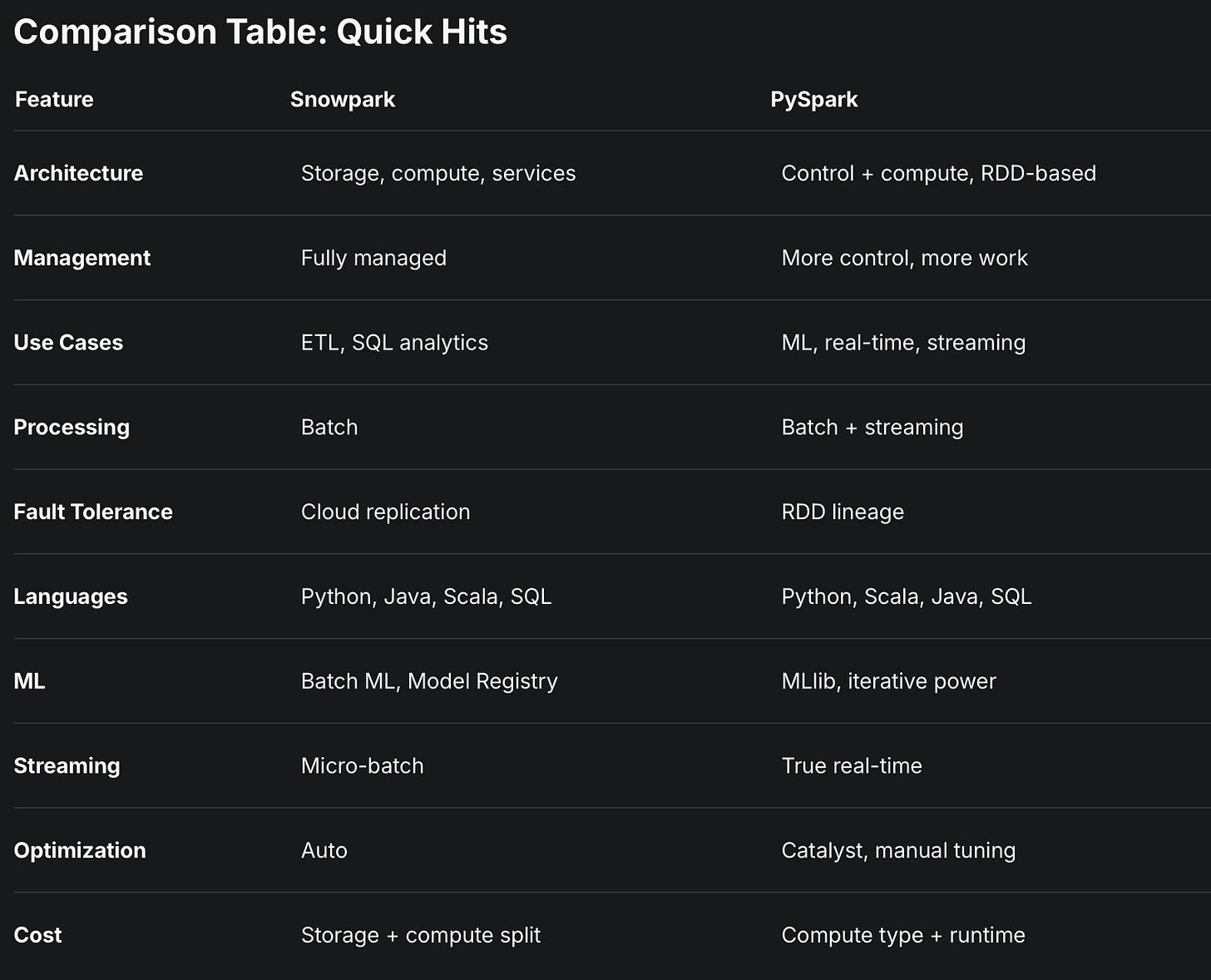

Here’s the deal: Snowpark’s a managed powerhouse tied to Snowflake’s ecosystem. PySpark’s a flexible beast rooted in Apache Spark via Databricks.

I’ll break down the architecture, programming, performance, cost—everything you need to decide. Let’s roll.

Architectural Foundations: The Core Divide

Snowpark: Managed Simplicity

You may love Snowpark when you want to stop babysitting infrastructure. It’s built on Snowflake’s three-layer setup—storage, compute, services.

Storage lives in cloud object storage, split into compressed, columnar micro-partitions.

Compute? Virtual warehouses that scale on demand.

Services handle the brainwork: authentication, metadata, query parsing, and optimization.

It’s all separate, all elastic.

Why’s that gold? Because you don’t touch cluster configs or data sharding. Snowflake does it. Less headache, more focus on results.

PySpark: Control Freak’s Dream

PySpark on Databricks is different. It’s got a control plane for the backend services (the web app, job scheduling, workspace management) and a compute plane for the heavy lifting, serverless or classic, your call.

Built on Spark’s RDDs (Resilient Distributed Datasets), it’s distributed computing with grit.

You’ve got to think about partitioning, caching, and cluster sizing. Databricks smooths some edges, but you’re still in the driver’s seat. Why? Flexibility. You can tune it to the nth degree, but it’s work.
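Why does partitioning deserve your attention? Spark spreads rows across partitions by hashing a key, so one hot key can pile most of the work onto a single task. Here’s a toy Python model of that effect, assuming a hypothetical workload. It uses `zlib.crc32` as a stand-in hash (not Spark’s actual partitioner), and `assign_partition`, `partition_sizes`, and `skew_ratio` are made-up names for illustration.

```python
import zlib
from collections import Counter


def assign_partition(key: str, num_partitions: int) -> int:
    """Toy hash partitioner: stable crc32 of the key, modulo the partition count.
    crc32 is a stand-in for illustration, not the hash Spark actually uses."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_sizes(keys, num_partitions: int):
    """Count how many records land in each partition."""
    counts = Counter(assign_partition(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


def skew_ratio(sizes) -> float:
    """Largest partition divided by the average partition size (1.0 = perfectly even)."""
    avg = sum(sizes) / len(sizes)
    return max(sizes) / avg


# Hypothetical workload: 1,000 distinct customers plus one "hot" customer with 5,000 rows.
keys = [f"customer_{i}" for i in range(1000)] + ["customer_hot"] * 5000
sizes = partition_sizes(keys, num_partitions=8)
print(sizes, round(skew_ratio(sizes), 2))
```

Every row for the hot key hashes to the same partition, so the skew ratio lands far above 1.0. On a real cluster that shows up as one straggler task holding up the whole stage.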

Snowpark’s for when you want to delegate ops and move fast. PySpark’s for when you need to own every lever. If your team’s lean, Snowpark saves sanity. If you’ve got Spark wizards, PySpark flexes harder.

Programming Models: How You’ll Build

Snowpark: DataFrames with a SQL Soul

Snowpark’s DataFrame API feels familiar if you’ve touched PySpark. You write Python, Java, or Scala, and Snowpark lazily translates your DataFrame operations into SQL that runs inside Snowflake’s engine: batch-focused, SQL-native. User-defined functions (UDFs)? Yup, you can sling custom code, executed right in Snowflake’s sandbox. The Snowpark ML API’s there for modeling, paired with a Model Registry. But it isn’t built around in-memory iterative computation the way Spark is. Think batch ML, not tight training loops. Why? Snowflake’s built for analytics, not low-latency, record-by-record processing.
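Under the hood, the idea is simple: chained DataFrame calls record a plan, and the plan compiles to SQL that only runs when you trigger an action. Here’s a toy Python model of that idea. `LazyFrame` is a hypothetical class, not Snowpark’s API, and real Snowpark generates different, more elaborate SQL.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LazyFrame:
    """Hypothetical toy frame: records operations instead of executing them."""
    table: str
    columns: tuple = ("*",)
    filters: tuple = ()

    def filter(self, condition: str) -> "LazyFrame":
        # Return a new frame with one more predicate; nothing executes yet.
        return LazyFrame(self.table, self.columns, self.filters + (condition,))

    def select(self, *cols: str) -> "LazyFrame":
        # Replace the projection; still nothing executes.
        return LazyFrame(self.table, tuple(cols), self.filters)

    def to_sql(self) -> str:
        # Compile the recorded plan into a single SQL statement.
        sql = f"SELECT {', '.join(self.columns)} FROM {self.table}"
        if self.filters:
            sql += " WHERE " + " AND ".join(f"({c})" for c in self.filters)
        return sql


orders = LazyFrame("ORDERS")
query = orders.filter("AMOUNT > 100").filter("REGION = 'EU'").select("ORDER_ID", "AMOUNT")
print(query.to_sql())
# SELECT ORDER_ID, AMOUNT FROM ORDERS WHERE (AMOUNT > 100) AND (REGION = 'EU')
```

In real Snowpark you’d chain calls like `session.table(...).filter(...).select(...)`, and nothing executes until an action such as `collect()` or `show()`. Because the whole plan ships as SQL, the heavy lifting happens in Snowflake’s engine, not in your Python process.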

PySpark: The Full Toolbox

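PySpark’s DataFrame API sits on top of RDDs. Transform, act, repeat: transformations are lazy, and nothing runs until an action like count() or a write triggers it. It’s loaded with built-in functions, and the Catalyst Optimizer turns your code into a lean, optimized execution plan.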
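What does “optimizing” actually mean here? Catalyst represents your query as a tree and rewrites it with rules. A classic example is constant folding, where `x + (1 + 2)` becomes `x + 3` before anything runs. Below is a toy Python model of that single rule. The `Literal`, `Attribute`, and `Add` classes are hypothetical Python stand-ins named after Catalyst’s expression types, not Catalyst’s real (Scala) code.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Literal:
    value: int


@dataclass(frozen=True)
class Attribute:
    name: str


@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"


Expr = Union[Literal, Attribute, Add]


def fold_constants(expr: Expr) -> Expr:
    """Toy rewrite rule: recursively replace Add(Literal, Literal) with one Literal."""
    if isinstance(expr, Add):
        left = fold_constants(expr.left)
        right = fold_constants(expr.right)
        if isinstance(left, Literal) and isinstance(right, Literal):
            return Literal(left.value + right.value)
        return Add(left, right)
    # Literals and attributes have nothing to fold.
    return expr


tree = Add(Attribute("x"), Add(Literal(1), Literal(2)))
print(fold_constants(tree))  # Add(left=Attribute(name='x'), right=Literal(value=3))
```

Catalyst applies many rules like this repeatedly across the logical plan, which is why the same DataFrame code can come out faster than the query you literally wrote.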

UDFs? Standard Python UDFs or vectorized pandas UDFs: you’ve got options, though they can lag behind native built-in functions, since data has to be shipped between the JVM and Python workers. Iterative algorithms? In-memory processing? It’s built for that.

Snowpark’s my pick for clean, SQL-driven pipelines. PySpark wins when I need ML muscle or streaming. If your data’s structured and your workflows are predictable, Snowpark’s a breeze. If you’re pushing boundaries—think real-time or deep learning—PySpark’s your beast.

Performance & Optimization: Speed Meets Smarts

Snowpark: Auto-Pilot Power

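Snowpark leans on Snowflake’s optimizer. Micro-partitions mean data’s pre-sliced for efficiency: each one carries metadata such as per-column min/max values, so the services layer can prune partitions that can’t match your filters before compute kicks in. You don’t tune much; it’s automatic. Caching’s there, but it’s not in-memory obsessed: you get a result cache and local disk caching on warehouses, and Snowpark’s cache_result() saves a DataFrame to a temporary table. Fault tolerance? Snowflake’s cloud replication handles it. Why’s this clutch? You get consistent speed without fiddling.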
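Here’s the pruning idea as a toy Python model. Each hypothetical partition records the min and max of one column, and a range filter skips any partition whose range can’t overlap it. This is a simplified sketch under stated assumptions (one column, dates stored as YYYYMMDD integers, made-up names), not Snowflake’s internal implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PartitionStats:
    """Hypothetical per-partition metadata: min/max of a single column."""
    partition_id: int
    min_value: int
    max_value: int


def prune(partitions: List[PartitionStats], low: int, high: int) -> List[int]:
    """Keep only partitions whose [min, max] range can overlap the filter [low, high]."""
    return [
        p.partition_id
        for p in partitions
        if p.max_value >= low and p.min_value <= high
    ]


# Hypothetical table well clustered by order_date, one month per partition.
stats = [
    PartitionStats(0, 20240101, 20240131),
    PartitionStats(1, 20240201, 20240229),
    PartitionStats(2, 20240301, 20240331),
    PartitionStats(3, 20240401, 20240430),
]

# Equivalent of: WHERE order_date BETWEEN 20240215 AND 20240310
print(prune(stats, 20240215, 20240310))  # [1, 2]
```

Only two of four partitions get scanned, and no data was read to make that call: the decision comes purely from metadata. Pruning works best when the filtered column is well clustered, so each partition covers a narrow range.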

PySpark: Hands-On Horsepower

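PySpark’s Catalyst Optimizer is a multi-stage beast: analysis, logical optimization, physical planning, and code generation. You may see it slash runtimes, but you need to know your data to max it out. In-memory caching with cache() or persist()? Huge. Iterative jobs that reuse the same data fly when you nail it, because they skip recomputing it on every pass.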
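Why does caching matter so much for iterative work? Without it, every pass over the data re-runs the whole lineage that produced it. Here’s a toy Python model. `ToyDataset` and `expensive_load` are hypothetical names, and `cache()` here only mimics, in spirit, the fact that Spark’s caching is lazy and kicks in on the first action.

```python
class ToyDataset:
    """Hypothetical toy dataset: recomputes on every action unless cached."""

    def __init__(self, compute):
        self._compute = compute    # function that (re)builds the data
        self._use_cache = False
        self._cached = None
        self.compute_count = 0     # how many times the expensive build ran

    def cache(self):
        # Lazy, like Spark's cache(): data is only stored on the first action.
        self._use_cache = True
        return self

    def collect(self):
        if self._use_cache and self._cached is not None:
            return self._cached
        self.compute_count += 1
        data = self._compute()
        if self._use_cache:
            self._cached = data
        return data


def expensive_load():
    # Stand-in for reading and transforming a large input.
    return [x * 0.5 for x in range(1000)]


def run_iterations(ds, iterations=10):
    """Iterative job: each pass rescans the data and nudges an estimate toward its mean."""
    estimate = 0.0
    for _ in range(iterations):
        data = ds.collect()
        mean = sum(data) / len(data)
        estimate += 0.5 * (mean - estimate)
    return estimate


uncached = ToyDataset(expensive_load)
cached = ToyDataset(expensive_load).cache()
run_iterations(uncached)
run_iterations(cached)
print(uncached.compute_count, cached.compute_count)  # 10 1
```

Same answer, one build instead of ten. The catch on a real cluster: cached data eats executor memory, so you cache what you reuse and unpersist it when you’re done.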

Snowpark’s low-effort performance suits batch warriors. PySpark demands skill but rewards you with raw speed on complex tasks. If you hate tuning, Snowpark. If you live for it, PySpark.

Development Experience: Day-to-Day Life

Snowpark: Streamlined Flow

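Snowpark’s baked into Snowflake’s web UI, Snowsight, with Python worksheets and notebooks. You code, test, deploy, with no cluster setup. Stored procedures and tasks orchestrate jobs cleanly. DevOps? Supported, through Git integration and the Snowflake CLI. It’s minimal fuss. Why? Snowflake’s a walled garden, but a damn nice one.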

PySpark: Power User Playground

Databricks notebooks are slick: interactive, collaborative. IDEs plug in through Databricks Connect. Workflows handle complex, multi-task orchestration and integrate with external tools. But you configure more: clusters, resources. Why? Flexibility comes with responsibility.
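Orchestration in Workflows boils down to a job made of tasks with dependencies, which is a directed acyclic graph. Here’s a minimal sketch, assuming a hypothetical pipeline where Databricks does the crunching and the last step exports to Snowflake, using Python’s standard `graphlib` to resolve the run order. It is not the Databricks Jobs API; it only shows how a dependency graph turns into stages.

```python
from graphlib import TopologicalSorter

# Hypothetical job: each task maps to the set of tasks it depends on.
job = {
    "ingest_raw": set(),
    "clean_orders": {"ingest_raw"},
    "clean_customers": {"ingest_raw"},
    "build_features": {"clean_orders", "clean_customers"},
    "train_model": {"build_features"},
    "export_to_snowflake": {"build_features"},
}

sorter = TopologicalSorter(job)
sorter.prepare()
stages = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # tasks whose dependencies have all finished
    stages.append(ready)                # tasks in one stage can run in parallel
    sorter.done(*ready)

print(stages)
# [['ingest_raw'], ['clean_customers', 'clean_orders'], ['build_features'], ['export_to_snowflake', 'train_model']]
```

Each inner list is a stage whose tasks don’t depend on each other, so an orchestrator can run them concurrently. That’s the payoff of declaring dependencies instead of hard-coding a sequence.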

Snowpark’s for quick wins, PySpark’s for deep dives. If your team’s small or new, Snowpark’s simpler. If you’re a pro shop, PySpark’s richer.

Language Support: What You’ll Write

Both cover Python, Java, and Scala. Snowpark leans hard into SQL, Snowflake’s roots. Spark itself is Scala-native, with Python (that’s PySpark), Java, and SQL fully loaded. Why care? Skill fit. SQL pros love Snowpark. Spark vets thrive on PySpark.

Ecosystem & Integrations: Playing Nice

Snowpark: Snowflake’s World

Snowpark ties into Snowflake’s Marketplace—data sharing, apps, analytics focus. It’s tight, controlled. Why? One-stop shop for warehouse fans.

PySpark: Spark’s Universe

PySpark taps Apache’s ecosystem (Hadoop, Hive, Kafka) plus Databricks’ extras like Delta Lake and MLflow. ML integrations shine, starting with Spark’s own MLlib. Why? It’s broader, messier, and more powerful.

Snowpark’s cohesive if you’re Snowflake-committed. PySpark’s expansive for diverse stacks.

Cost & Scalability: Your Wallet’s Stake

Snowpark: Predictable Elasticity

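Snowflake bills storage (per TB per month) separately from compute (credits consumed while a warehouse runs, billed per second with a 60-second minimum each time it starts). You can scale either without touching the other. Pay for what you use, and auto-suspend stops idle warehouses from burning credits. Why? Budget clarity.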
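How does compute billing play out? Here’s a back-of-the-envelope sketch in Python, assuming standard warehouse credit rates (1, 2, 4, 8, 16 credits per hour from X-Small to X-Large), per-second billing with a 60-second minimum each time a warehouse resumes, and a hypothetical $3.00 per credit. Your real rate depends on edition, region, and contract, and cloud services and storage charges are left out.

```python
# Credits per hour for standard warehouse sizes (each size doubles the previous one).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}

PRICE_PER_CREDIT = 3.00  # ASSUMPTION: hypothetical; varies by edition, region, and contract


def warehouse_credits(size: str, runtime_seconds: float) -> float:
    """Credits for one warehouse run: per-second billing with a 60-second minimum.
    Assumes the warehouse resumes for this run (e.g. it auto-suspended in between)."""
    billable_seconds = max(runtime_seconds, 60)
    return CREDITS_PER_HOUR[size] * billable_seconds / 3600


# Hypothetical day: 24 short runs of 20 s on a Medium warehouse,
# plus one 30-minute batch on a Large warehouse.
short_runs = 24 * warehouse_credits("M", 20)
batch = warehouse_credits("L", 30 * 60)
total = short_runs + batch
print(round(total, 3), round(total * PRICE_PER_CREDIT, 2))  # 5.6 16.8
```

Notice the minimum at work: 24 runs of 20 seconds bill like 24 full minutes. Batching tiny jobs together can change the math noticeably.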

PySpark: Configurable Chaos

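Databricks bills in Databricks Units (DBUs), with the rate depending on compute type (jobs, all-purpose, SQL, serverless) and pricing tier; on classic compute you also pay your cloud provider for the VMs. Premium add-ons stack on top. You manage scaling: fixed nodes when you rely on caching, ephemeral clusters for bursts. Why? Control, but vigilance required.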

Snowpark’s easier to forecast. PySpark’s cheaper if you optimize, pricier if you don’t.
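“Cheaper if you optimize, pricier if you don’t” is easy to see with rough arithmetic. This sketch assumes a classic-compute cluster where cost per node-hour is DBUs times the DBU price plus the cloud VM price. Every rate in it is a made-up placeholder, not a Databricks or cloud price, and `ClusterRate` and `cluster_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ClusterRate:
    """All numbers are HYPOTHETICAL placeholders; look up real DBU and VM rates."""
    dbu_per_node_hour: float  # DBUs consumed by one node in one hour
    price_per_dbu: float      # $ per DBU for the chosen compute type and tier
    vm_price_per_hour: float  # $ per node-hour paid to the cloud provider (classic compute)


def cluster_cost(rate: ClusterRate, nodes: int, hours: float) -> float:
    """Classic-compute cost: Databricks DBU charge plus cloud VM charge."""
    per_node_hour = rate.dbu_per_node_hour * rate.price_per_dbu + rate.vm_price_per_hour
    return nodes * hours * per_node_hour


rate = ClusterRate(dbu_per_node_hour=1.0, price_per_dbu=0.30, vm_price_per_hour=0.50)

# Same 2 hours of real work on 8 nodes, same rate for simplicity...
tuned = cluster_cost(rate, nodes=8, hours=2)        # cluster shuts down when the job ends
untuned = cluster_cost(rate, nodes=8, hours=2 + 6)  # ...but left running idle for 6 more hours
print(round(tuned, 2), round(untuned, 2))  # 12.8 51.2
```

Same work, four times the bill: idle hours cost exactly as much as busy ones. Auto-termination and job clusters that shut down after the run are the first levers to pull.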

Security & Governance: Trust Factor

Both nail security. Snowpark inherits Snowflake’s role-based access control and centralized governance. Databricks offers Unity Catalog: table permissions, cloud identity tie-ins, audit logs. How to choose? Ecosystem fit. Snowflake’s is tighter, Databricks’ is broader.
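Role-based access boils down to privileges granted to roles. In Snowflake, roles can also be granted to other roles, so a parent role inherits everything granted to the roles beneath it. Here’s a toy Python model of that inheritance with hypothetical role and privilege names. It is not how Snowflake or Unity Catalog evaluate grants internally.

```python
from typing import Dict, Set

# Hypothetical role hierarchy: each role inherits every role granted to it.
ROLE_GRANTS: Dict[str, Set[str]] = {
    "ANALYST": set(),
    "ENGINEER": {"ANALYST"},  # ENGINEER inherits ANALYST
    "ADMIN": {"ENGINEER"},    # ADMIN inherits ENGINEER (and so ANALYST)
}

# Hypothetical privileges granted directly to each role.
ROLE_PRIVILEGES: Dict[str, Set[str]] = {
    "ANALYST": {"SELECT:SALES.ORDERS"},
    "ENGINEER": {"INSERT:SALES.ORDERS"},
    "ADMIN": {"DROP:SALES.ORDERS"},
}


def effective_privileges(role: str) -> Set[str]:
    """Union of a role's own privileges and those of every role it inherits."""
    seen: Set[str] = set()
    stack = [role]
    privileges: Set[str] = set()
    while stack:
        current = stack.pop()
        if current in seen:  # guard against revisiting a role
            continue
        seen.add(current)
        privileges |= ROLE_PRIVILEGES.get(current, set())
        stack.extend(ROLE_GRANTS.get(current, set()))
    return privileges


print(sorted(effective_privileges("ENGINEER")))
# ['INSERT:SALES.ORDERS', 'SELECT:SALES.ORDERS']
```

Privileges flow up the hierarchy, never down: ENGINEER gets ANALYST’s read access, but not ADMIN’s power to drop tables.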

Skill Transfer: Switching Lanes

PySpark to Snowpark

DataFrame syntax carries over. You’ll need to learn Snowflake’s model: storage, optimization. Streaming’s weaker, so you adjust. Why easy? Less ops, more SQL.

Snowpark to PySpark

RDDs, memory management, Catalyst—new turf. You master clusters, gain ML and streaming power. Why worth it? Flexibility unlocks doors.

Either way, core skills translate. Focus on architecture, not syntax.

Real-World Use Cases: Where They Shine

Snowpark Wins

ELT Pipelines: Structured data, batch transforms—clean, fast.

SQL Analytics: Minimal ops, max insight.

Snowflake Shops: Extend without new infra.

PySpark Wins

ML Workloads: Iterative models, in-memory speed.

Real-Time: Streaming, low-latency needs.

Mixed Data: Structured or not, it eats it all.

My Verdict: Pick Your Poison

Snowpark’s your jam if you want simplicity, managed infra, and SQL-driven wins. I’d pick it for a lean team or an analytics-first play. PySpark’s king if you need ML, streaming, or control. I’d go there for cutting-edge projects or a team with Spark roots.

Look, don’t chase hype. Match your skills, stack, goals. Snowpark’s saved me from cluster hell. PySpark’s let me push limits. Test both—your data, your rules. That’s how you win.